Stable Diffusion is a deep learning model released in 2022 and based on diffusion techniques. It is primarily used to generate detailed images from text descriptions.

It can also be applied to other tasks such as inpainting, outpainting, and image-to-image translation guided by a text prompt. The model was developed by the CompVis group at Ludwig Maximilian University of Munich together with Runway, with compute contributed by Stability AI and training data sourced from non-profit organizations.

Let's take a close look at how it works. Why does this understanding matter? Beyond being interesting in its own right, knowing the inner workings makes you more effective as a researcher or an artist: you can use the tool correctly and get more precise results.

What is the difference between text-to-image and image-to-image generation? What does the CFG value do? What is denoising strength? We'll try to answer these questions and more in this article.

What is Stable Diffusion?

Stable Diffusion uses a kind of diffusion model (DM), called a latent diffusion model (LDM). Introduced in 2015, diffusion models are trained with the objective of removing successive applications of Gaussian noise on training images, which can be thought of as a sequence of denoising autoencoders.

Diffusion Models are generative models, meaning that they are used to generate data similar to the data on which they are trained. Fundamentally, Diffusion Models work by destroying training data through the successive addition of Gaussian noise, and then learning to recover the data by reversing this noising process. After training, we can use the Diffusion Model to generate data by simply passing randomly sampled noise through the learned denoising process.
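For readers who prefer symbols, the noising process can be written compactly. The notation below follows the common DDPM formulation, which is an assumption here since the article does not fix one: $\beta_t$ is the noise variance added at step $t$, $x_0$ is the training image, and $x_t$ is its noised version.

$$
q(x_t \mid x_{t-1}) = \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\right),
\qquad
x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,
\quad
\bar\alpha_t = \prod_{s=1}^{t}(1-\beta_s),
\quad
\epsilon \sim \mathcal{N}(0, I)
$$

As $t$ grows, $\bar\alpha_t$ shrinks toward 0, so $x_t$ keeps less and less of $x_0$ and approaches pure Gaussian noise.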

Why are these models called diffusion models? Simply because the mathematical equations used describe a phenomenon that looks very much like diffusion in physics (figure below). If you're interested in the details of the mathematical modelling, you can refer to the Stable Diffusion research paper or look up score-based generative modeling.

Figure: Training Stable Diffusion, SDE modelling (diffusion process)

The Diffusion Process:

In the illustration above, we can distinguish two phases: the forward SDE and the reverse SDE. During forward diffusion, noise is added to a training image step by step, gradually turning it into an image of pure, unstructured noise. The process turns any bird or frog image into the same kind of noise, so that eventually you can no longer tell whether the image started out as a bird or a frog. This part is crucial for training Stable Diffusion models.

To better understand the diffusion process, imagine a solitary droplet of ink descending into a clear glass of water. As moments pass, the ink diffuses seamlessly within the water, its distribution becoming entirely stochastic. The initial location of the ink droplet, whether at the center or periphery, eventually becomes indistinguishable.

The figure below shows an image undergoing forward diffusion: it gradually dissolves into random noise.

Figure: Forward Diffusion
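Forward diffusion can be sketched in a few lines of NumPy. This is a minimal illustration, not Stable Diffusion's actual code: the linear noise schedule `betas`, the synthetic gradient `image`, and the helper names are all assumptions made for the example. It prints how strongly the noised image still correlates with the original at a few steps.

```python
import numpy as np

def forward_diffusion(x0, betas, rng):
    """Return [x_0, x_1, ..., x_T], each one noisier than the previous."""
    xs = [x0]
    x = x0
    for beta in betas:
        eps = rng.normal(size=x.shape)                      # fresh Gaussian noise
        x = np.sqrt(1.0 - beta) * x + np.sqrt(beta) * eps   # one noising step
        xs.append(x)
    return xs

def correlation(a, b):
    """Pearson correlation between two images, as a rough 'how much is left' score."""
    return float(np.corrcoef(a.ravel(), b.ravel())[0, 1])

rng = np.random.default_rng(0)
# Stand-in "image": a 64x64 gradient with values in [-1, 1] (assumption).
image = np.linspace(-1.0, 1.0, 64 * 64).reshape(64, 64)
betas = np.linspace(1e-4, 0.02, 1000)                       # assumed linear schedule
trajectory = forward_diffusion(image, betas, rng)

for step in (0, 250, 500, 1000):
    print(step, round(correlation(image, trajectory[step]), 3))
```

The correlation starts at 1 and falls toward 0, which is the ink-drop picture in numbers: after enough steps, nothing in the result tells you what the original image was.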

Reverse diffusion is the core idea: starting from a noisy, seemingly meaningless image, it recovers an image of either a bird or a frog.

Technically, every diffusion process has two components: (1) drift and (2) random motion. In reverse diffusion, the drift points toward either a bird image or a frog image and toward nothing in between, which is why the result is always one or the other.

Figure: Reverse Diffusion
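The interplay of drift and random motion can be seen in a one-dimensional toy. The sketch below is an assumption-laden illustration, not Stable Diffusion itself: the "bird" and the "frog" are two narrow modes at -2 and +2, the noise level grows linearly with time, and the drift uses the exact score of this toy distribution instead of a learned network. It runs an Euler-Maruyama discretization of the reverse-time SDE.

```python
import numpy as np

MU, S0, SIGMA_MAX = 2.0, 0.1, 5.0   # mode position, mode width, largest noise level

def score(x, t):
    """Exact gradient of the log-density of the noised two-mode mixture at time t."""
    var = S0**2 + (SIGMA_MAX * t) ** 2
    return (MU * np.tanh(MU * x / var) - x) / var

def reverse_diffusion(n_samples=2000, n_steps=500, seed=0):
    rng = np.random.default_rng(seed)
    dt = 1.0 / n_steps
    x = rng.normal(0.0, SIGMA_MAX, size=n_samples)        # start from (almost) pure noise
    for k in range(n_steps, 0, -1):
        t = k * dt
        g2 = 2.0 * SIGMA_MAX**2 * t                       # g(t)^2 = d(sigma^2)/dt
        drift = g2 * score(x, t) * dt                     # (1) drift toward the data
        random_motion = np.sqrt(g2 * dt) * rng.normal(size=n_samples)  # (2) random motion
        x = x + drift + random_motion
    return x

samples = reverse_diffusion()
near_a_mode = np.mean(np.abs(np.abs(samples) - MU) < 0.5)
print("fraction near -2 or +2:", near_a_mode)
print("fraction that ended at +2:", np.mean(samples > 0))
```

Samples start as broad noise and end up clustered around one of the two modes, with roughly half at each. In Stable Diffusion the role of `score` is played by the trained noise predictor.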

Advantages of Diffusion Models

Research on diffusion models has grown rapidly in recent years. Inspired by non-equilibrium thermodynamics, these models currently produce state-of-the-art image quality.

Beyond image quality, diffusion models offer several other advantages, most notably that they do not require adversarial training. The difficulties of adversarial training are well documented, and when a non-adversarial alternative offers comparable performance and training efficiency, it is usually the better choice. On the training side, diffusion models are also scalable and parallelizable.

Although diffusion models may seem to produce results out of thin air, they rest on careful and interesting mathematical choices, and best practices are still evolving in the literature.

The methodology behind the training process

The concept of reverse diffusion undoubtedly stands as a clever and elegant approach. Yet, the pivotal question remains: "How can this feat be accomplished?"

The key to reversing diffusion is knowing how much noise was added to an image. To find out, a neural network is trained to predict the added noise. In Stable Diffusion this network is called the noise predictor, and it is a U-Net model.

Figure: U-Net architecture

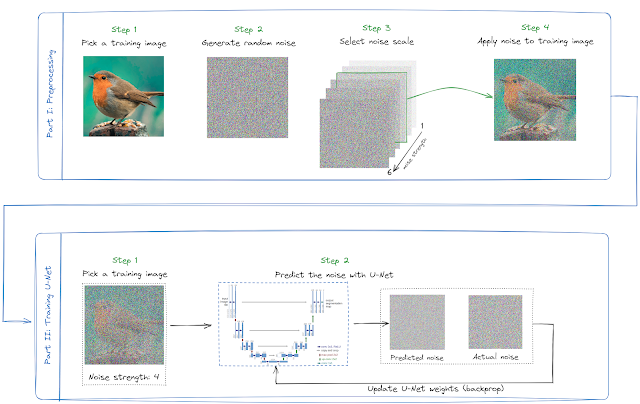

Briefly, the training procedure follows these steps:

- Pick a training image, such as a photograph of a bird.

- Generate a random noise image.

- Corrupt the training image by adding this noise image over a chosen number of steps.

- Train the noise predictor (i.e., the U-Net) to tell how much noise was added. This is done by adjusting its weights and showing it the correct answer, as in standard supervised learning.

Figure: Simplified unconditioned training process of Stable Diffusion models

Figure: Unconditioned training process of Stable Diffusion models

Figure: Full Stable Diffusion training process
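The training steps listed above can be sketched with a deliberately tiny model. Everything here is an assumption made for illustration: the "image" is a random 16-value vector, the noise schedule is invented, and the noise predictor is a per-noise-level scale and bias instead of a U-Net. What carries over is the loop itself: pick a noise level, add known noise, predict it, and nudge the weights toward the correct answer.

```python
import numpy as np

rng = np.random.default_rng(0)
T, D = 10, 16                                   # noise levels, "pixels" per image
betas = np.linspace(0.05, 0.5, T)               # assumed noise schedule
alpha_bar = np.cumprod(1.0 - betas)
x0 = rng.uniform(-1.0, 1.0, size=D)             # stand-in for one training image

# Hypothetical toy noise predictor (NOT a U-Net): eps_hat = scale[t] * x_t + bias[t]
scale = np.zeros(T)
bias = np.zeros((T, D))

def train_step(lr=0.02, batch=64):
    t = rng.integers(0, T, size=batch)          # random noise level for each sample
    eps = rng.normal(size=(batch, D))           # the noise we add (the "correct answer")
    ab = alpha_bar[t][:, None]
    x_t = np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * eps    # noised training image
    err = scale[t][:, None] * x_t + bias[t] - eps       # predicted noise minus true noise
    # Gradient step on the squared error (constant factors folded into lr).
    np.add.at(scale, t, -lr * (err * x_t).mean(axis=1))
    np.add.at(bias, t, -lr * err)
    return float(np.mean(err ** 2))             # loss measured before the update

losses = [train_step() for _ in range(3000)]
print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```

The loss is the mean squared difference between predicted and true noise. With a single training image this toy predictor can learn the answer almost exactly; a real model sees many images and needs the capacity of a U-Net.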

Generating images in the text-to-image and image-to-image frameworks:

- Stable Diffusion generates a random noise tensor in the latent space. You control this tensor through the seed of the random number generator: a given seed always produces the same random tensor. At this stage the tensor is your image in latent space, but it consists entirely of noise.

- The noise predictor (U-Net) takes the noisy latent image and the text prompt as inputs and predicts the noise, also in latent space.

- The predicted latent noise is subtracted from the latent image to obtain the updated latent image.

- The previous two steps (noise prediction and subtraction) are repeated for the chosen number of denoising steps.

- Finally, the decoder of the variational autoencoder (VAE) converts the latent image back to pixel space. This is the image you get after running Stable Diffusion.
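The generation steps above can be sketched as a short loop. This is a schematic under heavy assumptions, not the real pipeline: `toy_text_encoder`, `toy_noise_predictor`, and `toy_vae_decode` are hypothetical stand-ins for the text encoder, the U-Net, and the VAE decoder, and the fixed update fraction replaces a real sampler. Only the control flow and the role of the seed mirror the description.

```python
import numpy as np

LATENT_SHAPE = (4, 64, 64)   # latent size for a 512x512 image in Stable Diffusion v1

def toy_text_encoder(prompt):
    """Hypothetical stand-in: map a prompt to a fixed latent-shaped 'target'."""
    rng = np.random.default_rng(sum(prompt.encode("utf-8")))
    return rng.normal(size=LATENT_SHAPE)

def toy_noise_predictor(latent, prompt_target, t):
    """Hypothetical stand-in for the U-Net: whatever differs from the target is 'noise'."""
    return latent - prompt_target

def toy_vae_decode(latent):
    """Hypothetical stand-in for the VAE decoder: keep 3 channels, upsample 8x per side."""
    return np.repeat(np.repeat(latent[:3], 8, axis=1), 8, axis=2)

def generate(prompt, seed, num_steps=20):
    rng = np.random.default_rng(seed)                 # the seed fixes the starting noise
    latent = rng.normal(size=LATENT_SHAPE)            # random latent noise tensor
    prompt_target = toy_text_encoder(prompt)
    for t in range(num_steps, 0, -1):                 # repeat for the chosen step count
        predicted_noise = toy_noise_predictor(latent, prompt_target, t)  # predict noise
        latent = latent - 0.1 * predicted_noise       # subtract (a share of) the noise
    return toy_vae_decode(latent)                     # latent space -> pixel space

image_a = generate("a bird", seed=42)
image_b = generate("a bird", seed=42)
image_c = generate("a bird", seed=7)
print(image_a.shape, np.array_equal(image_a, image_b), np.array_equal(image_a, image_c))
```

Running the same prompt with the same seed reproduces the image exactly, while a different seed gives a different starting tensor and therefore a different result.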

The same steps apply to image-to-image generation, with one significant difference: the starting point of reverse diffusion is not a random noise tensor but an image supplied by the user, to which noise is added in an amount set by the denoising strength the user chooses.
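One way to picture denoising strength is as the fraction of the noise schedule that gets applied to the input image, and hence the fraction of denoising steps that remain to be run. The sketch below assumes this mapping along with a DDPM-style linear schedule. The helper `img2img_start` is hypothetical and specific tools may implement the details differently.

```python
import numpy as np

def img2img_start(init_latent, strength, num_steps, alpha_bar, rng):
    """Noise `init_latent` according to `strength`; return it with the steps left to run."""
    steps_to_run = min(int(num_steps * strength), num_steps)
    if steps_to_run == 0:
        return init_latent.copy(), 0                  # strength 0: image left untouched
    # Position in the full noise schedule that corresponds to the starting step.
    t_start = (steps_to_run * len(alpha_bar)) // num_steps - 1
    ab = alpha_bar[t_start]
    noise = rng.normal(size=init_latent.shape)
    noised = np.sqrt(ab) * init_latent + np.sqrt(1.0 - ab) * noise
    return noised, steps_to_run

rng = np.random.default_rng(0)
alpha_bar = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 1000))   # assumed schedule
init_latent = rng.normal(size=(4, 64, 64))                    # stand-in for an encoded image

for strength in (0.0, 0.5, 1.0):
    noised, steps = img2img_start(init_latent, strength, 20, alpha_bar, rng)
    kept = float(np.corrcoef(init_latent.ravel(), noised.ravel())[0, 1])
    print(f"strength={strength}: {steps} denoising steps, correlation with input {kept:.2f}")
```

A low strength keeps most of the input and runs only a few denoising steps, so the result stays close to the original. A strength of 1 buries the input in noise, which makes the process behave almost like text-to-image.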

In both text-to-image and image-to-image generation, the model can be conditioned on a text prompt. The Classifier-Free Guidance (CFG) parameter controls how strongly the prompt influences the diffusion process, and therefore has a large effect on the resulting image.

When it is set to 0, generation ignores the prompt and the process is unconditioned. Raising the value steers the diffusion process to follow the prompt more closely.
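In the usual formulation of classifier-free guidance, the noise predictor is run twice per step, once with the prompt and once without, and the two predictions are blended. The sketch below assumes that formulation and the convention described above, where a scale of 0 means "ignore the prompt". The function name and the tiny arrays are illustrative only, and some tools define their scale differently.

```python
import numpy as np

def classifier_free_guidance(noise_uncond, noise_cond, cfg_scale):
    """Blend the unconditional and prompt-conditioned noise predictions."""
    return noise_uncond + cfg_scale * (noise_cond - noise_uncond)

# Stand-in predictions (assumptions): in practice both come from the U-Net.
noise_uncond = np.array([0.0, 1.0, 2.0])
noise_cond = np.array([1.0, 1.0, 0.0])

for cfg_scale in (0.0, 1.0, 7.5):
    print(cfg_scale, classifier_free_guidance(noise_uncond, noise_cond, cfg_scale))
```

With a scale of 0 the result is the unconditional prediction, with 1 it is the prompt-conditioned prediction, and larger values push further in the direction the prompt suggests.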