Introduction:

Detecting fake faces, or more generally fake (photoshopped) images, is a relatively simple task for the human eye, thanks to the remarkable ability of the human brain to spot manipulated patterns or patches in an image. When looking at a possibly fake face, we search for areas that seem manipulated or deformed, compare them with what the corresponding facial features should look like, and naturally arrive at a prediction of whether the face is fake or real. However, there are cases where we cannot make a confident decision, especially when the deformations are at the pixel level or when the AI-generated face matches every feature a human would expect of a normal face, as in the last face image in the figure below. The figure shows 6 fake faces that differ in how difficult they are to detect.

Convolutional neural networks have proven robust and efficient in many computer vision problems, particularly in image classification. So we can train a convolutional neural network to classify fake and real faces with a very high accuracy score. What intrigued me most while training such a network, however, was the question of how it detects fake face images. More precisely, does the network detect the fake patterns and give them more weight when producing its prediction?

Trying to answer this question led me to a whole new area of AI: Explainable AI. Fundamentally, Explainable AI aims to uncover why an AI system arrived at a specific decision. In computer vision, many techniques have been developed that let us look into the behavior of convolutional neural networks and understand how they produce predictions.

Besides understandability, being able to translate the calculations made by a neural network into visuals that humans can understand helps us improve the performance of the model and avoid issues such as age, sex, and ethnicity biases.

Some of the techniques developed for interpreting computer vision models are the following:

- SHAP or SHapley Additive exPlanations: It is described by its developers as follows: “SHAP is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allocation with local explanations using the classic Shapley values from game theory and their related extensions.” Basically, the open source SHAP package provides tools that can be used with any simple or complex machine learning model to understand which features are most important for the model. SHAP can be used with different types of models, such as Linear Regression, Logistic Regression, Gradient Boosting, SVMs, and Neural Networks. In computer vision, SHAP can also be used to visualize which parts of the image are most important for a given prediction.

- Feature Maps: Feature maps are used to visualize what a model is “seeing” through a set of convolutional filters. They make it possible to understand how the learned filters lead the model to recognize a certain class. The implementation of this method is straightforward and can be done using any deep learning framework.

- Occlusion Sensitivity: This is another simple technique for understanding which parts of an image are most important for a deep network's classification. It visualizes how each part of the image affects the network's confidence by occluding parts of the image one at a time and observing the change in the prediction.

- Grad-CAM or Gradient-weighted Class Activation Mapping: This method was introduced in 2016 by Ramprasaath R. Selvaraju et al., who propose a technique for producing "visual explanations" for decisions from a large class of CNN-based models. Given an input image, this method computes a heatmap for any convolutional layer of a CNN model. The heatmap represents the areas that the model focused on most when producing the predicted class.
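To make the occlusion sensitivity idea from the list above more concrete, here is a minimal NumPy sketch. It is only an illustration under stated assumptions: `predict_fn` is a hypothetical stand-in for a trained model that returns a single class score, and the patch size, stride, and fill value are arbitrary choices rather than values used in this article.

```python
import numpy as np

def occlusion_sensitivity(image, predict_fn, patch=4, stride=4, fill=0.0):
    """Map of confidence drops caused by occluding each patch of the image.

    image:      array of shape (H, W) or (H, W, C).
    predict_fn: hypothetical callable returning one class score (float)
                for one image; it stands in for a trained model.
    """
    h, w = image.shape[:2]
    baseline = predict_fn(image)
    heatmap = np.zeros((h, w), dtype=float)
    counts = np.zeros((h, w), dtype=float)
    for top in range(0, h - patch + 1, stride):
        for left in range(0, w - patch + 1, stride):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = fill
            # A large drop means the hidden region mattered for the score
            drop = baseline - predict_fn(occluded)
            heatmap[top:top + patch, left:left + patch] += drop
            counts[top:top + patch, left:left + patch] += 1
    # Average the drops where patches overlap (no overlap when stride == patch)
    return heatmap / np.maximum(counts, 1)

# Toy "model" (an assumption for the demo): the score is the mean
# brightness of the top-left 4x4 block, so only that block matters.
def toy_score(img):
    return float(img[:4, :4].mean())

toy_image = np.ones((8, 8))
sensitivity = occlusion_sensitivity(toy_image, toy_score)
```

In this toy case the sensitivity map is non-zero only over the top-left block, which is the only region the toy score depends on. With a real classifier, `predict_fn` would wrap the model's predicted probability for the class of interest.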

We will therefore train a CNN model using a ResNet architecture to classify real and fake face images, then use Grad-CAM and Guided Grad-CAM to visualize which part of the input image the model was focusing on for a given class prediction. But before discussing the training process, let’s take a quick look at the Grad-CAM algorithm.

Grad-CAM Algorithm:

The Gradient-weighted Class Activation Mapping algorithm can be implemented in two phases. As portrayed in the figure below, the first phase consists in performing forward propagation and saving both the output of the model (class prediction) and the output of the convolutional layer, which is also known as the feature activation map.

In the second phase, as shown in the figure below, we calculate the Grad-CAM heatmap in 3 steps:

- Calculating the derivatives of the predicted class score with respect to the chosen feature activation map via backprop.

- Calculating the neuron importance weights by applying global average pooling to the derivatives calculated in the previous step, which gives one weight per feature map channel.

- Calculating the linear combination of the neuron importance weights and the feature activation map, then applying the ReLU function to the resulting tensor so that only regions with a positive influence on the predicted class are kept.

The result is then a heatmap that can be resized and displayed over the input image to visualize the most activated regions of the image for the class that’s been predicted.
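The second phase can be sketched in a few lines of NumPy. This is a minimal illustration, not the code used for this article: the function names are hypothetical, and the gradients from the first step are assumed to be already available (in practice they would come from the automatic differentiation of a deep learning framework). The nearest-neighbor upsampling is a simplification chosen for the sketch.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap for one convolutional layer.

    activations: feature activation map saved in the forward pass, shape (H, W, K).
    gradients:   derivatives of the class score w.r.t. the activations,
                 same shape, assumed to be computed via backprop beforehand.
    """
    # Neuron importance weights: global average pooling of the gradients
    weights = gradients.mean(axis=(0, 1))                  # shape (K,)
    # Weighted linear combination of the feature maps, followed by ReLU
    cam = np.maximum((activations * weights).sum(axis=-1), 0.0)  # shape (H, W)
    # Scale to [0, 1] for display (a display convention, not part of the algorithm)
    peak = cam.max()
    return cam / peak if peak > 0 else cam

def upsample_nearest(heatmap, factor):
    """Resize the heatmap by an integer factor so it can be laid over the input."""
    return np.kron(heatmap, np.ones((factor, factor)))

# Toy example with 2 feature maps on a 2x2 grid (values made up for the demo)
activations = np.zeros((2, 2, 2))
activations[0, 0, 0] = 1.0   # channel 0 fires in the top-left cell
activations[1, 1, 1] = 2.0   # channel 1 fires in the bottom-right cell
# Channel 0 supports the class (+1 gradients), channel 1 opposes it (-1 gradients)
gradients = np.stack([np.ones((2, 2)), -np.ones((2, 2))], axis=-1)

heatmap = grad_cam(activations, gradients)
overlay_map = upsample_nearest(heatmap, 2)   # 4x4 map, ready to overlay
```

In this toy case only the top-left cell stays active: the channel with negative importance is removed by the ReLU, which is why Grad-CAM highlights only the regions that speak in favor of the predicted class.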

As you may have noticed in the Grad-CAM algorithm illustration, there is a part that we haven’t discussed yet: Guided Grad-CAM. You don’t need to worry about it for now, as we’re going to discuss it later in this article.

The results of any deep learning application depend heavily on the model architecture as well as on the data, so preparing a “good” dataset which meets a certain number of criteria is crucial to obtain the desired results.

Datasets & Training:

The model is trained for 20 epochs using the Adam optimization algorithm, with early-stopping and learning-rate-reduction callbacks.

Results:

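The trained model is then used to perform Grad-CAM and Guided Grad-CAM on a sample of images. But first we need to know what exactly Guided Grad-CAM is. In short, it multiplies the upsampled Grad-CAM heatmap element-wise with a guided backpropagation map of the input, which gives a visualization that is both class-specific and detailed at the pixel level.

Now, let's discuss the results obtained by our trained model. You can use this notebook on Kaggle to reproduce or check more results.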

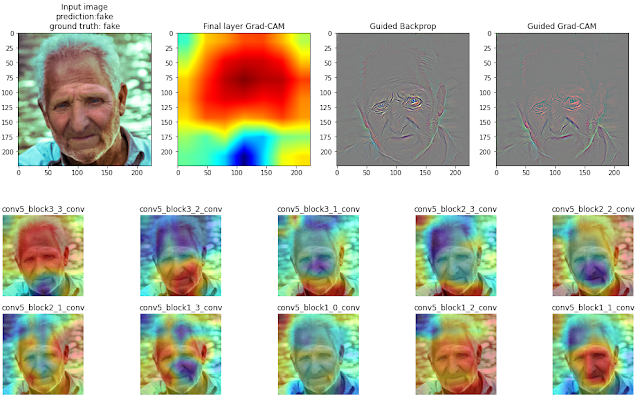

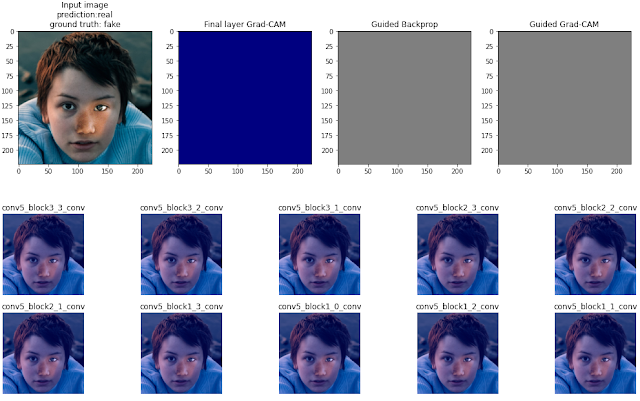

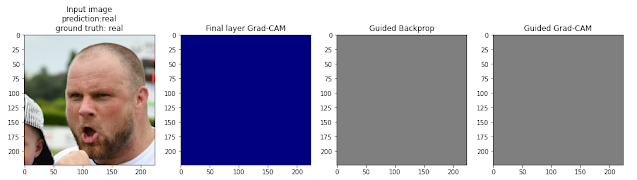

The results are presented using a few example images with different levels of difficulty for the human eye. The Grad-CAM heatmap is calculated and displayed for the last 10 convolutional layers of the model, whereas the guided backprop and Guided Grad-CAM are only shown for the last convolutional layer of the model, which is the one that was fine-tuned.
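The way guided backprop and Grad-CAM are combined into Guided Grad-CAM can be sketched as follows. This is a hedged illustration with made-up values: the function name is hypothetical, the guided backprop map is assumed to be already computed (guided backprop is a variant of backprop that only lets positive gradients flow back through ReLU units), and nearest-neighbor upsampling is used here for simplicity where the Grad-CAM paper uses bilinear interpolation.

```python
import numpy as np

def guided_grad_cam(guided_backprop, heatmap):
    """Element-wise product of a guided backprop map and an upsampled heatmap.

    guided_backprop: pixel-level map at input resolution, shape (H, W, C),
                     assumed to be computed beforehand.
    heatmap:         Grad-CAM heatmap, shape (h, w), where H and W are
                     assumed to be integer multiples of h and w.
    """
    H, W = guided_backprop.shape[:2]
    h, w = heatmap.shape
    # Bring the coarse heatmap to input resolution (nearest neighbor)
    upsampled = np.repeat(np.repeat(heatmap, H // h, axis=0), W // w, axis=1)
    # Keep pixel-level detail only where the class heatmap is active
    return guided_backprop * upsampled[..., np.newaxis]

# Toy inputs (made up for the demo): uniform guided backprop map, 2x2 heatmap
toy_guided = np.full((4, 4, 3), 0.5)
toy_heatmap = np.array([[1.0, 0.0],
                        [0.0, 0.5]])
toy_result = guided_grad_cam(toy_guided, toy_heatmap)
```

The product keeps the fine-grained pixels from guided backprop only inside the regions that Grad-CAM marks as relevant to the predicted class, and scales them by how relevant each region is.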

The model reached an average F1-score of 0.85 on the test set, so we’re going to look at both positive and negative examples and discuss each case further.
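For readers unfamiliar with the metric, here is a small sketch of how an averaged F1-score can be computed for a two-class problem. It assumes the average is a macro average (the unweighted mean of the per-class F1-scores), which the article does not state explicitly, and the labels below are toy values, not results from the trained model.

```python
def f1_per_class(y_true, y_pred, label):
    """F1-score of one class, treating that class as the positive one."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)
    fp = sum(t != label and p == label for t, p in pairs)
    fn = sum(t == label and p != label for t, p in pairs)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1-scores."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(f1_per_class(y_true, y_pred, label) for label in labels) / len(labels)

# Toy labels for illustration only
y_true = ["fake", "fake", "fake", "real", "real", "real"]
y_pred = ["fake", "fake", "real", "real", "real", "fake"]
score = macro_f1(y_true, y_pred)
```

Each class here has 2 true positives, 1 false positive, and 1 false negative, so both per-class scores and their mean come out at 2/3.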

Obviously altered images:

This first image is a fake face that is easily detected by the human eye: we can clearly see that the eyes and nose were manipulated. The Grad-CAM results on the last layer show that the model is focusing on the upper region of the face, with more emphasis on the boundary between the real part and the manipulated part of the face.

Furthermore, we can see in the guided backprop and Guided Grad-CAM that the pixels that activated the convolutional layers the most are around the eye region of the face. This further suggests that the pixels in the manipulated part are the ones that activate the neurons most strongly to produce the correct prediction.

Hard-to-detect altered images:

In this second case, we're investigating the model's performance on images that are harder for the human eye to identify as fake. For instance, as much as I tried to find the issue with the example shown above, I couldn't come to the conclusion that it is a manipulated image. However, we can see that the model correctly labeled the image.

Real images:

Conclusion:

We’ve seen that using Grad-CAM we can investigate the model's behavior and get a clearer view of how it came to the prediction that was made. Grad-CAM provided us with a visual way of testing the performance of a model, and by discussing the results on a sample of images, we can take note of what the model fails to do and what it is more inclined towards when making a prediction. Based on these remarks, we can then make the right adjustments to increase the performance of the model.

In this case of fake face detection, we’ve seen that the model was able to behave like a human in some ways. The model successfully managed to focus on the facial features in many cases, but it failed to produce the correct results in others. One way to improve the model's performance is to use more images of fake faces that include small distortions or manipulations of some areas of the face, as well as face images with fake backgrounds, to make the model pay more attention to smaller details on the face and to the image background, which can be a great indicator of image manipulation.

The work is done on Kaggle, and a public notebook is available via this link.